|

I am a Research Assistant in Computer Science at TAMS. My primary research interests lie at the intersection of AI and physics simulation. I began my academic career at Harbin Institute of Technology in 2014. Under the guidance of Prof. Jianwei Zhang, I earned my Ph.D. from the University of Hamburg in 2022. Beyond my academic work, I enjoy building science and engineering projects in my spare time, which are displayed in the 'Leisure Pursuit' section. Email | CV | Google Scholar |

|

|

|

| Dec | 2023 | Presented our paper at SIGGRAPH Asia in Sydney. |

| Sep | 2023 | One paper on human motion reconstruction accepted to SIGGRAPH Asia. |

| Aug | 2023 | Honored to win second prize (9/5705 submissions globally) at HICOOL 2023 and to receive a landing reward of $150,000. |

| Aug | 2023 | Honored to win second prize in the China Innovation & Entrepreneurship International Competition and to receive a cash reward of $8,000. |

| Feb | 2023 | One paper on object manipulation in simulation accepted to ICRA 2023. See you in London! |

| Jan | 2023 | One paper on sim-to-real skills transfer accepted to Transactions on Cognitive and Developmental Systems. |

| Apr | 2022 | One paper on multimodal reinforcement learning in simulation accepted to RA-L; it will be presented at ICRA. |

| Feb | 2022 | One paper on visual reinforcement learning for sim-to-real transfer accepted to Frontiers in Neurorobotics. |

| Oct | 2021 | One paper on self-supervised attention learning accepted to ROBIO. |

| Jul | 2020 | One paper on skill learning and sim-to-real transfer accepted to IROS. See you in Las Vegas! |

|

My research centers on robotics simulation and deep reinforcement learning, with a particular focus on 3D physics engines. Below is a curated list of my publications. |

|

|

Lin Cong*, Philipp Ruppel*, Xiang Pan, Yizhou Wang, Norman Hendrich, Jianwei Zhang (*Equal Contribution) SIGGRAPH Asia, 2023 project page | paper | video | code |

|

|

Hao Zhang, Hongzhuo Liang, Lin Cong, Jianzhi Lyu, Long Zeng, Pingfa Feng, Jianwei Zhang ICRA, 2023 project page | arXiv | video |

|

Yunlei Shi, Chengjie Yuan, Athanasios Tsitos, Lin Cong, Hamid Hadjar, Zhaopeng Chen, Jianwei Zhang IEEE Transactions on Cognitive and Developmental Systems, 2023 paper | video |

|

|

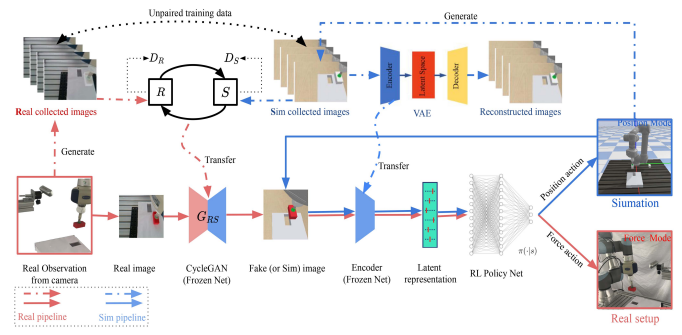

Lin Cong, Hongzhuo Liang, Philipp Ruppel, Yunlei Shi, Michael Görner, Norman Hendrich, Jianwei Zhang Frontiers in Neurorobotics, 2022 project page | paper | video |

|

|

Lin Cong*, Hongzhuo Liang*, Norman Hendrich, Shuang Li, Fuchun Sun, Jianwei Zhang (*Equal Contribution) RA-L & presented at ICRA, 2022 project page | paper | video |

|

|

Lin Cong, Yunlei Shi, Jianwei Zhang ROBIO, 2021 project page | paper |

|

|

Lin Cong, Michael Görner, Philipp Ruppel, Hongzhuo Liang, Norman Hendrich, Jianwei Zhang IROS, 2020 project page | paper | video |

|

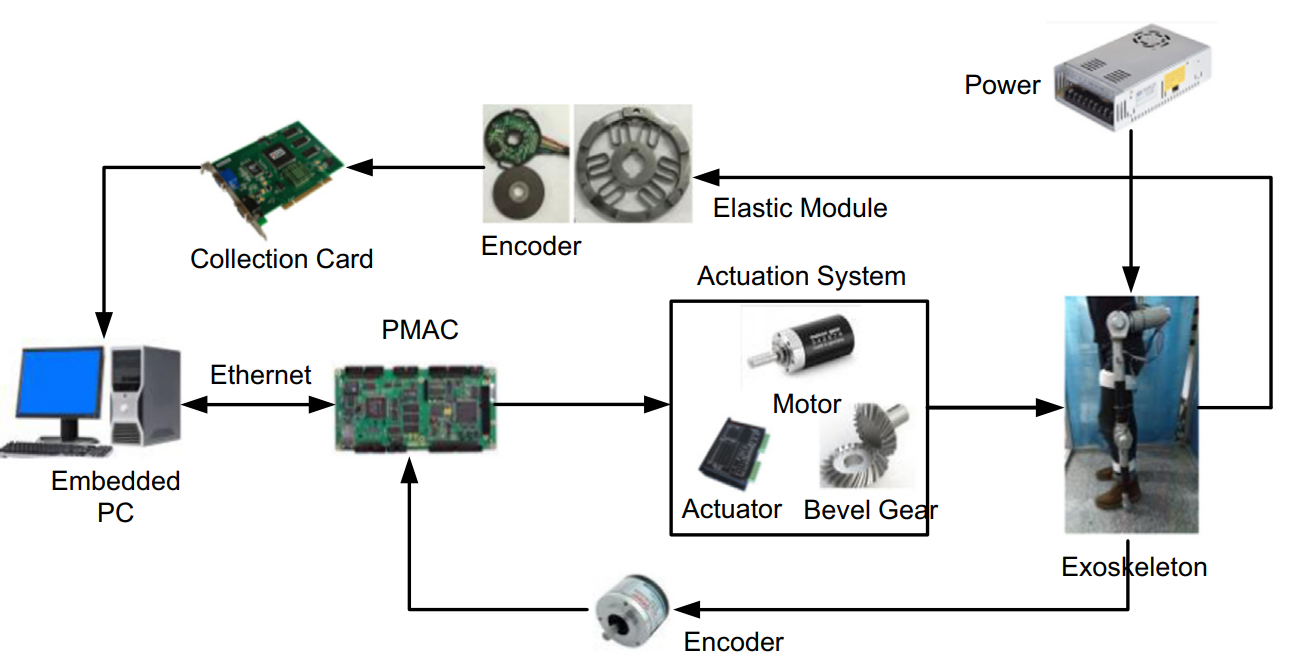

Yi Long, Zhijiang Du, Lin Cong, Weidong Wang, Zhiming Zhang, Wei Dong ISA Transactions, 2017 paper |

|

Beyond academic research, my interests span a range of fields: hardware such as sensors and circuit boards, Virtual Reality (VR) equipment, game engines, and building my own robots. |

|

|

Lin Cong Spare time project, 2022 project page |

|

|

Lin Cong Spare time project, 2022 project page |

|

|

Lin Cong Spare time project, 2021 project page | code |
